Google has introduced Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, new AI models designed to power robots that can think, plan, and act responsibly. The company says the release marks an “era of physical agents,” in which machines go beyond reacting to commands and begin reasoning about their environment.

According to a Google DeepMind announcement, the Gemini Robotics models can handle complex, multifaceted tasks by combining vision, language, and reasoning, bringing more general-purpose intelligence to robotics.

Push for general-purpose intelligent robots

Google said the release of Gemini Robotics 1.5 builds on its earlier work bringing Gemini’s multimodal intelligence to robotics, describing it as another step toward intelligent, truly general-purpose robots. The company positioned the launch as part of a wider effort to equip autonomous machines to operate in complex real-world settings.

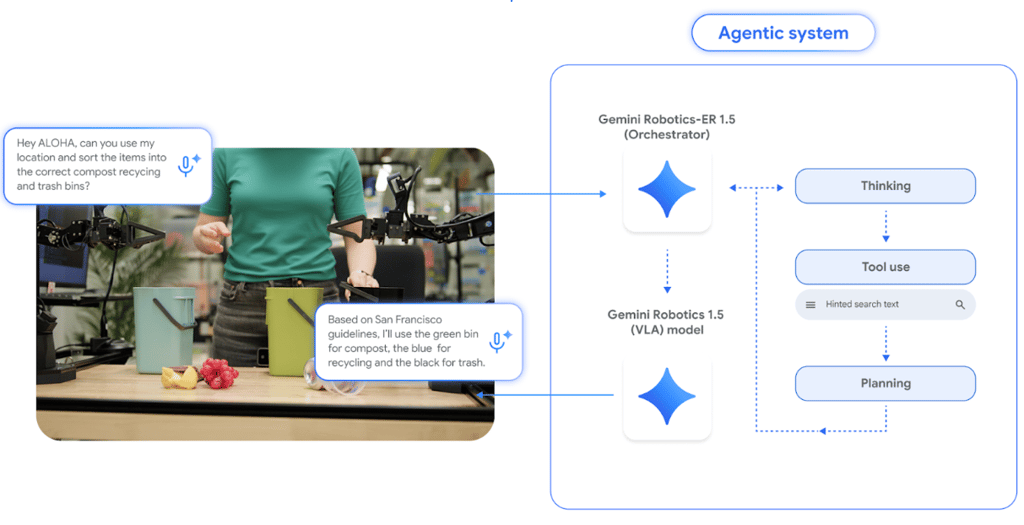

The two models divide the work between high-level planning and direct action, offering complementary capabilities designed to make robots more versatile and adaptable in real-world environments.

Gemini Robotics 1.5

Google describes Gemini Robotics 1.5 as its most capable vision-language-action (VLA) model, designed to help robots think before they move rather than simply follow instructions. Instead of translating a command directly into motion, the model generates a reasoning process in natural language, which lets it map out each step and makes its actions more transparent.

This approach means a robot can handle semantically complex tasks, such as sorting laundry or organizing items, by breaking them into manageable steps and deciding the best way to carry them out. It also allows the model to adjust mid-task if the environment changes or the user redirects it.

Key capabilities include:

- Multi-level reasoning: the ability to explain and refine actions before executing them.

- Interactivity: responding to everyday language and explaining its approach while it works.

- Dexterity: performing fine motor control, such as folding paper or packing a lunch box.

Gemini Robotics 1.5 can also learn across different embodiments, transferring behaviors from one robot form to another, whether a stationary two-arm platform or a humanoid machine.

Gemini Robotics-ER 1.5

Gemini Robotics-ER 1.5, on the other hand, is designed to think ahead. Google calls it a sophisticated embodied reasoning model: essentially a brain that orchestrates a robot’s activities and breaks broad instructions into detailed plans.

Rather than simply reacting to a command like “Clean the kitchen,” ER 1.5 can map the task into stages (wiping counters, loading dishes, clearing surfaces) and then instruct other systems to carry them out. It communicates in natural language, estimates its progress, and can even call Google Search to fill gaps in its knowledge.

Its key capabilities include:

- Orchestration: coordinating complex tasks by planning and delegating actions.

- Spatial and temporal reasoning: understanding scenes in fine detail and tracking cause and effect as tasks unfold.

- Benchmark performance: state-of-the-art results on 15 embodied reasoning benchmarks, covering tasks from pointing accuracy to video question answering.

Google says ER 1.5 is the strategic layer of the system, providing the reasoning and foresight that make physical robots more adaptable and reliable in unpredictable real-world settings.

Minds that plan and act

Google developed the two models to work in tandem, with Gemini Robotics-ER 1.5 handling big-picture plans and Gemini Robotics 1.5 carrying out the physical actions. The company says this setup allows a robot to take a single instruction, break it into smaller goals, and then work through them in order.

For example, ER 1.5 can work out how to clean a room, while Robotics 1.5 translates that plan into specific movements, such as picking up items or opening a container. According to Google, ER 1.5 directs tasks as the high-level brain, while Robotics 1.5 acts as the hands and eyes that complete them.
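The planner/executor split can be illustrated with a minimal Python sketch. It is not Google’s implementation or API: the `Planner` and `Executor` classes, the `run` function, and the hard-coded playbook are all hypothetical stand-ins, with a lookup table taking the place of ER 1.5’s reasoning and a log entry taking the place of Robotics 1.5’s motor commands.

```python
from dataclasses import dataclass, field

# Hypothetical stand-ins only: neither class is a Google API. They illustrate
# the division of labor (planner decomposes, executor acts step by step).


@dataclass
class Planner:
    """Plays the high-level role the article assigns to Gemini Robotics-ER 1.5."""

    # Hypothetical lookup table standing in for a model's task decomposition.
    playbook: dict[str, list[str]]

    def plan(self, instruction: str) -> list[str]:
        # Break one broad instruction into ordered sub-goals; unknown
        # instructions are treated as a single step.
        return self.playbook.get(instruction, [instruction])


@dataclass
class Executor:
    """Plays the low-level role the article assigns to Gemini Robotics 1.5."""

    log: list[str] = field(default_factory=list)
    # Hypothetical set of sub-goals that "fail", used to show early stopping.
    fail_on: set[str] = field(default_factory=set)

    def act(self, subgoal: str) -> bool:
        # A real VLA model would emit motor commands here; we only record the step.
        self.log.append(f"executing: {subgoal}")
        return subgoal not in self.fail_on


def run(instruction: str, planner: Planner, executor: Executor) -> list[str]:
    """Execute planned sub-goals in order and return the ones completed."""
    completed: list[str] = []
    for step in planner.plan(instruction):
        if not executor.act(step):
            break  # a real orchestrator might replan here instead of stopping
        completed.append(step)
    return completed


planner = Planner({"Clean the kitchen": ["wipe counters", "load dishes", "clear surfaces"]})
print(run("Clean the kitchen", planner, Executor()))
```

Keeping planning and acting behind separate interfaces mirrors the article’s point that one model can reason about the overall goal while another focuses on physical execution; the early `break` marks where replanning after a failed step would go.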

Toward solving AGI in the physical world

Google casts Gemini Robotics 1.5 as a milestone toward solving artificial general intelligence (AGI) in the physical world, moving robots from command followers to systems that can reason, plan, and act on that reasoning.

Safety remains a fundamental part of this vision. Google said the models are aligned with its AI Principles, use semantic reasoning to assess risks before acting, and are evaluated with the ASIMOV benchmark, which tests how models respond in safety-critical scenarios.

Google’s robotics push points to a future in which advanced machines step out of research labs and into the fabric of everyday life.

